- Beijing One-Stop Marketing Platform Provider Comparison Report: 2026 Scores for 8 Leading Service Providers in Compliance Delivery and API Extensibility Dimensions2026-03-04View details

- How Can Global Traffic Ecosystem Providers Restructure Customer Acquisition Channels? Three Key Points for Improving ROI Compared to Traditional DSPs2026-03-04View details

- What is an AI+SEM Smart Advertising Marketing System Provider? Analyzing the Underlying Logic of Intelligent Advertising Placement by 20262026-03-04View details

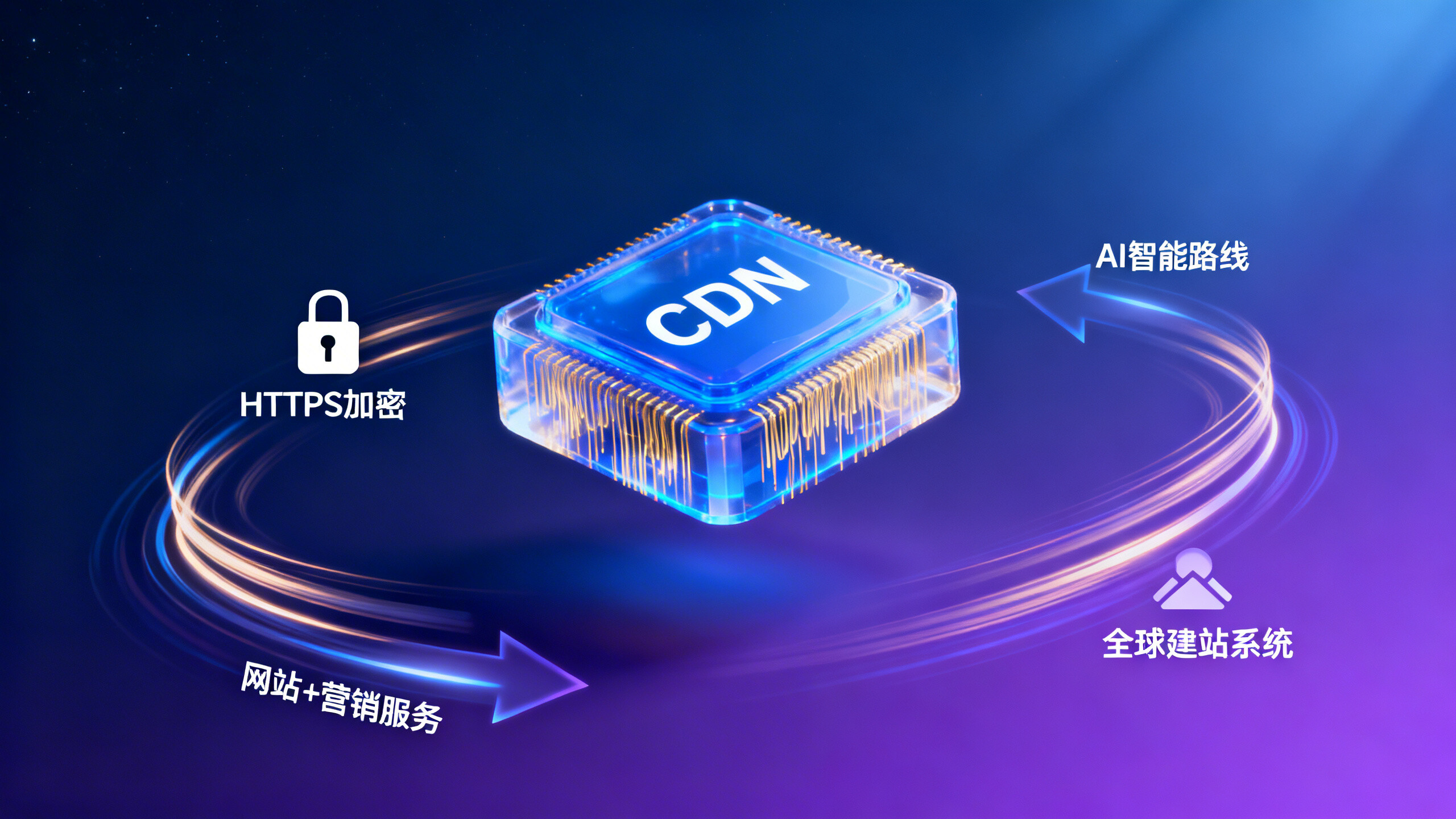

- How Does CDN Improve Website Access Speed? Top 5 Cost-Effective Providers for 2026: Includes Customized Quotation Templates for SMEs2026-03-04View details

- What Does HTTPS Do for Websites? Supplier Comparison Chart2026-03-04View details

- Why Is Website Speed So Important? — Examining the Impact of Core Web Vitals Weight Increases in 2026 on Organic Traffic Through Search Engine Algorithm Evolution2026-03-05View details

Are the Intelligent Website Detection Tools Offered by Website Builders Trustworthy?

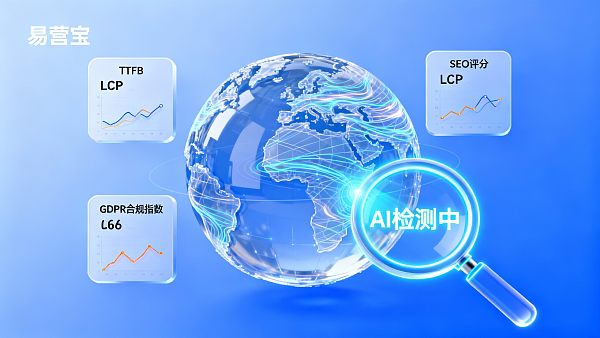

The core criteria for judging whether an intelligent website detection tool is trustworthy are the transparency of its algorithm and the compliance of its data sources, rather than a single detection result.

When a detection tool covers both technical performance (such as TTFB, LCP) and content quality (such as SEO score), its credibility is significantly higher than that of a single-dimensional detection tool.

In a globalized operations environment, tools that support multilingual SEO diagnostics are more valuable in practice than those that only provide basic technical metrics.

If the tool does not disclose its detection logic or has not passed third-party security certification (such as SOC2), its report recommendations should be used for reference only and not as a basis for decision-making.

According to the 2023 Martech Benchmark Report, detection tools that provide real-time monitoring and historical data comparison are 47% more reliable than tools that take a single snapshot.

Evaluation Logic and Risk Boundaries of Intelligent Website Detection Tools

The reliability of a website builder's intelligent detection function depends on how well its technical implementation matches the business scenario. For businesses that rely on their websites for customer acquisition, a detection tool needs to address technical issues (loading speed, mobile adaptation) and content issues (keyword validity, optimization of the page title, description, and keywords, often abbreviated TDK) at the same time. Evaluation should focus on the completeness of the detection dimensions, the frequency of data updates, and whether the tool follows official search engine guidelines (such as Google's Core Web Vitals).

Scenario 1: Globalization Compliance Testing for Multilingual Independent Websites

Background: Companies need to meet data compliance requirements such as the EU GDPR and the US CCPA, and their website content needs to be adapted to the language preferences of different regions.

Judgment Logic: Detection tools should include three basic capabilities: legal text scanning, cookie compliance checking, and multilingual SEO scoring. If the tool only supports English or does not track current privacy laws (such as Brazil's LGPD, in effect since 2020), its report may miss key risk points.

Risk Control: First verify whether the tool uses regionalized detection nodes (for example, running GDPR checks from European servers), and check whether its legal database is updated at least every 3 months.
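
The two risk-control checks above can be sketched as a small function. This is only an illustration: the `tool` dictionary layout, the region codes, and the 90-day approximation of "3 months" are assumptions, not fields exposed by any particular vendor.

```python
from datetime import date

# Assumption: "less than 3 months" is approximated as 90 days.
MAX_UPDATE_AGE_DAYS = 90
# Hypothetical mapping of regulation -> region the check should run from.
REQUIRED_REGIONS = {"GDPR": "EU", "CCPA": "US"}


def compliance_gaps(tool: dict, today: date) -> list[str]:
    """Return the reasons a tool fails the Scenario 1 checks (empty = passes)."""
    gaps = []
    # Regionalized nodes: each regulation should be tested from its own region.
    for regulation, region in REQUIRED_REGIONS.items():
        if region not in tool["node_regions"]:
            gaps.append(f"no {region} node for {regulation}")
    # Legal database freshness.
    age_days = (today - tool["legal_db_updated"]).days
    if age_days >= MAX_UPDATE_AGE_DAYS:
        gaps.append(f"legal database is {age_days} days old")
    return gaps


# Made-up example vendor profile.
example_tool = {"node_regions": {"US"}, "legal_db_updated": date(2025, 1, 1)}
print(compliance_gaps(example_tool, today=date(2025, 6, 1)))
```

An empty list means both checks pass; anything else is a question to put to the vendor before relying on the report.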

Scenario 2: Performance Optimization Diagnosis for High-Traffic E-Commerce Websites

Background: A surge in traffic during a promotion period caused conversion rates to drop, so performance bottlenecks and content failures need to be identified.

Judgment Logic: A reliable detection tool should distinguish between routine detection (such as daily scheduled crawling) and stress testing (simulating peak traffic), and be able to correlate JavaScript execution efficiency with the shopping cart abandonment rate. According to industry practice, a tool that checks less often than once every 6 hours may miss cumulative layout shift (CLS) problems caused by sudden traffic spikes.

Risk Control: Avoid tools that cannot distinguish between lab data (collected in a simulated environment) and real user monitoring (RUM) data. The latter is more valuable in e-commerce scenarios.
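
One way to check the 6-hour guideline is to look at the timestamps of a tool's past detection runs and find the longest gap between them. The sketch below assumes the tool lets you export those timestamps; the function names are illustrative.

```python
from datetime import datetime, timedelta

# Guideline from the text: at least one detection run every 6 hours.
MAX_GAP = timedelta(hours=6)


def largest_gap(run_times: list[datetime]) -> timedelta:
    """Longest interval between two consecutive detection runs."""
    ordered = sorted(run_times)
    gaps = (later - earlier for earlier, later in zip(ordered, ordered[1:]))
    return max(gaps, default=timedelta(0))


def samples_often_enough(run_times: list[datetime]) -> bool:
    return largest_gap(run_times) <= MAX_GAP


# Made-up run log: the 04:00 -> 13:00 gap is 9 hours, so the check fails.
runs = [
    datetime(2026, 3, 4, 0, 0),
    datetime(2026, 3, 4, 4, 0),
    datetime(2026, 3, 4, 13, 0),
]
print(largest_gap(runs), samples_often_enough(runs))
```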
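
To see why the distinction matters, compare a single lab measurement with the 75th percentile of real user samples, which is the percentile Google uses when assessing Core Web Vitals. The numbers below are invented for illustration, and the nearest-rank percentile is just one simple way to compute it.

```python
import math


def percentile_nearest_rank(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


LCP_GOOD_MS = 2500  # LCP "good" threshold (2.5 seconds)

lab_lcp_ms = 1800  # one simulated run on a fast test machine (made-up value)
rum_lcp_ms = [1500, 1700, 2100, 2600, 3200, 4100, 1900, 2300]  # made-up user samples

p75 = percentile_nearest_rank(rum_lcp_ms, 75)
print("lab passes:", lab_lcp_ms <= LCP_GOOD_MS)
print("RUM p75:", p75, "passes:", p75 <= LCP_GOOD_MS)
```

In this invented data set the lab run looks healthy while a quarter of real visits are slower than the threshold, which is exactly the case a lab-only tool would hide.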

Scenario 3: Sustainability Assessment of Long-Term SEO Strategies

Background: Businesses need to continuously monitor ranking fluctuations for hundreds of keywords, along with changes in competitors' content.

Judgment Logic: The detection tool should provide a historical ranking chart for each keyword (covering at least 6 months of data) and a content freshness score (for example, one that reports when its TF-IDF model was last updated). Given the frequent updates to search engine algorithms (e.g., Google's core algorithm updates an average of 12 times per year), conclusions drawn by a tool that does not show data collection timestamps are questionable.

Risk Control: Require detection tool providers to disclose how their crawlers handle anti-crawler policies, to avoid website penalties caused by violations of search engine crawling rules.
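
Both conditions (at least 6 months of history, and a timestamp on every data point) are easy to verify against an exported ranking history. The record layout below, with a `collected_on` field, is a hypothetical export format used only for illustration.

```python
from datetime import date

# Assumption: "6 months" is approximated as 182 days.
MIN_HISTORY_DAYS = 182


def history_problems(points: list[dict]) -> list[str]:
    """List the ways a keyword's ranking history falls short (empty = acceptable)."""
    problems = []
    dated = [p["collected_on"] for p in points if p.get("collected_on")]
    missing = len(points) - len(dated)
    if missing:
        problems.append(f"{missing} data point(s) lack a collection timestamp")
    if not dated or (max(dated) - min(dated)).days < MIN_HISTORY_DAYS:
        problems.append("history spans less than 6 months")
    return problems


# Made-up export for one keyword.
history = [
    {"rank": 12, "collected_on": date(2025, 1, 1)},
    {"rank": 9, "collected_on": None},
    {"rank": 7, "collected_on": date(2025, 8, 1)},
]
print(history_problems(history))
```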

Industry Practices and Technology Adaptation Recommendations

Currently, mainstream website building platforms typically implement detection functions in one of three ways: 1) self-developed algorithms plus third-party data interfaces (such as the Google PageSpeed Insights API); 2) pure third-party service integration (such as a Semrush/Lighthouse combination); 3) a hybrid model (self-developed core indicators plus purchased long-tail data). Of these, the hybrid model offers the best balance of cost and accuracy and accounts for approximately 58% of the market (data source: 2023 CMS feature survey).
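
As a rough picture of what a third-party data interface looks like in the first approach, the sketch below builds a PageSpeed Insights v5 request URL and pulls the lab and field LCP values out of a response. The response here is a trimmed, hand-written sample rather than real API output, and the field names reflect the v5 response format as commonly documented; verify them against the official API reference before relying on them.

```python
from urllib.parse import urlencode

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url: str, strategy: str = "mobile") -> str:
    """Build the GET URL for a PageSpeed Insights run (an API key may be needed for regular use)."""
    return f"{PSI_ENDPOINT}?{urlencode({'url': page_url, 'strategy': strategy})}"


def extract_lcp(psi_response: dict) -> dict:
    """Pull the lab (Lighthouse) and field (real user) LCP values from a response."""
    audits = psi_response["lighthouseResult"]["audits"]
    lab = audits["largest-contentful-paint"]["numericValue"]
    # Field data is absent for pages without enough real-user traffic.
    field = (
        psi_response.get("loadingExperience", {})
        .get("metrics", {})
        .get("LARGEST_CONTENTFUL_PAINT_MS", {})
        .get("percentile")
    )
    return {"lab_lcp_ms": lab, "field_p75_lcp_ms": field}


# Hand-written sample with only the fields used above (values are invented).
sample_response = {
    "lighthouseResult": {"audits": {"largest-contentful-paint": {"numericValue": 1834.5}}},
    "loadingExperience": {"metrics": {"LARGEST_CONTENTFUL_PAINT_MS": {"percentile": 2710}}},
}

print(psi_request_url("https://example.com/"))
print(extract_lcp(sample_response))
```

A tool built this way inherits both the strengths and the blind spots of the upstream API, which is one reason to ask vendors which parts of a report are self-computed and which are passed through.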

Take the YiYingBao intelligent website building system as an example. Its AI detection tool uses the hybrid model: a self-developed NLP algorithm analyzes TDK keyword density (in line with Google's E-E-A-T principles), while RUM data nodes across 7 continents monitor real user access performance. For a company that needs both multilingual compliance and localized SEO, this solution can reduce the manual cross-validation workload by approximately 35%. Note, however, that its advertising material detection module does not currently support emerging platforms such as TikTok, so covering them requires secondary development via the API.

- First verify whether the detection tool distinguishes between "technical metrics" (such as LCP ≤ 2.5 seconds) and "business metrics" (such as the increase in inquiries per 1-point improvement in SEO score).

- Require suppliers to provide at least three real-world optimization case studies for similar clients (including raw data from before and after optimization).

- For globalization projects, check whether the tool covers the specific requirements of the target market (such as Yandex's site quality index, ИКС, which replaced the older tIC score in 2018, for Russia).

We recommend running comparative tests in a sandbox environment: use different tools to test the same page at the same time, and check whether the difference between their results exceeds the industry average error range (±8% for technical performance tests, ±15% for SEO scores).
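
A comparison like this can be scripted once each tool's result for the same page is collected. The text does not define "difference rate" precisely, so the sketch below assumes it means each tool's percentage deviation from the median of all tools; the tool names and values are made up.

```python
from statistics import median

# Tolerances from the text: ±8% for performance tests, ±15% for SEO scores.
TOLERANCE_PCT = {"performance": 8.0, "seo": 15.0}


def outliers(results: dict[str, float], kind: str) -> dict[str, float]:
    """Return tools whose result deviates from the median by more than the tolerance."""
    baseline = median(results.values())
    deviations = {
        tool: (value - baseline) / baseline * 100
        for tool, value in results.items()
    }
    return {
        tool: round(dev, 1)
        for tool, dev in deviations.items()
        if abs(dev) > TOLERANCE_PCT[kind]
    }


# Made-up LCP readings (ms) for the same page, taken at the same time.
lcp_by_tool = {"tool_a": 2400, "tool_b": 2500, "tool_c": 2900}
print(outliers(lcp_by_tool, "performance"))
```

A tool that is flagged here is not necessarily wrong, but the gap is a prompt to ask how and from where it collects its data.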

In the context of cross-border e-commerce website building, what truly needs to be verified first is not the absolute values of the test report, but rather the degree to which the tool's data collection methodology matches the rules of the target market's search engine.
